Now that we have one VM serving a website, the next step follows a common pattern: instead of a single VM, deploy multiple VMs to distribute the load. In Azure, this feature is called a virtual machine scale set (see the docs).

To build this in Terraform, we need the azurerm_linux_virtual_machine_scale_set resource type. The documentation includes a sample that shows how to use it.

Please read first!

But CAUTION: I ran this deployment several times and tried many possible parameters to deploy the scale set including the Apache web server. I could not find out why the custom script extension does not work during the initial deployment. The custom script is only deployed if you change the VM count after the deployment. You can see this issue here.

So I will go through the whole sample first, and afterward I would like to show how I would build the sample from Yevgeniy Brikman’s book by using App Services in Azure.

Let’s start with the virtual machine scale set:

First, we need a resource group to deploy everything into:

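The following is a minimal sketch of the resource group. It assumes the azurerm provider 3.x. The provider version pin, the resource name, the location and the tag values are hypothetical placeholders and are not taken from the original script.

```hcl
# Provider setup; the "~> 3.0" pin is an assumption.
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

# Resource group tagged with the app, its source, and the environment.
# Name, location, and tag values are hypothetical.
resource "azurerm_resource_group" "rg" {
  name     = "rg-webserver-dev-001"
  location = "westeurope"

  tags = {
    App         = "webserver"
    Source      = "terraform"
    Environment = "dev"
  }
}
```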

In this sample, we start using tags at the resource group level to record the app we deploy, its source, and the kind of environment. I also want to establish a naming convention based on the Microsoft best practices shared in this article.

So for a resource group, the suggested pattern is:

rg-

Next, the VNet:

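Here is a sketch of the VNet. It assumes the resource group is declared as azurerm_resource_group.rg. The VNet name and the 10.0.0.0/16 address space are hypothetical.

```hcl
# Virtual network for the scale set; it reuses the resource group's location and tags.
resource "azurerm_virtual_network" "vnet" {
  name                = "vnet-webserver-dev-001" # hypothetical name
  address_space       = ["10.0.0.0/16"]          # hypothetical range
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  tags                = azurerm_resource_group.rg.tags
}
```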

In the VNet, we have to define the internal subnet for the VMs in the scale set:

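Here is a matching subnet sketch. It assumes resources named azurerm_resource_group.rg and azurerm_virtual_network.vnet. The subnet name and the 10.0.2.0/24 prefix are hypothetical. In azurerm 3.x, address_prefixes takes a list.

```hcl
# Internal subnet that the scale set instances are placed in.
resource "azurerm_subnet" "internal" {
  name                 = "snet-internal" # hypothetical name
  resource_group_name  = azurerm_resource_group.rg.name
  virtual_network_name = azurerm_virtual_network.vnet.name
  address_prefixes     = ["10.0.2.0/24"] # hypothetical range inside the VNet
}
```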

In my script, I add the following resource before the VM scale set definition, because I want to assign an FQDN to the public IP of the load balancer. Terraform has a helpful resource that generates a random string to use in the FQDN:

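This sketch uses random_string from the hashicorp/random provider to build the DNS label of the load balancer's public IP. The resource names, the "web-" prefix and the Standard SKU are assumptions. The prefix is needed because an Azure DNS label has to start with a letter, while a random string may start with a digit.

```hcl
# Random, lowercase, alphanumeric suffix for the DNS label.
resource "random_string" "fqdn" {
  length  = 6
  special = false
  upper   = false
}

# Public IP for the load balancer frontend, with a DNS label.
resource "azurerm_public_ip" "lb" {
  name                = "pip-webserver-dev-001" # hypothetical name
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  allocation_method   = "Static"   # required for the Standard SKU
  sku                 = "Standard" # assumption; must match the load balancer SKU
  domain_name_label   = "web-${random_string.fqdn.result}"
}

# Print the resulting FQDN after "terraform apply".
output "fqdn" {
  value = azurerm_public_ip.lb.fqdn
}
```

Azure builds the FQDN from the label and the region, in the form <label>.<region>.cloudapp.azure.com.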

Implement a load balancer in our script

The common design pattern is to deploy a load balancer in front of the VMs in the scale set, so that incoming traffic is distributed across the virtual machines. We add a load balancer definition to the script:

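Here is a load balancer sketch. It assumes the public IP is declared as azurerm_public_ip.lb with the Standard SKU, because the load balancer SKU has to match the public IP SKU. The frontend name "PublicIPAddress" is a hypothetical value. Later load balancer resources refer to it by that name.

```hcl
# Public load balancer in front of the scale set.
resource "azurerm_lb" "lb" {
  name                = "lbe-webserver-dev-001" # hypothetical name
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  sku                 = "Standard" # must match the public IP SKU

  # Frontend bound to the public IP that carries the DNS label.
  frontend_ip_configuration {
    name                 = "PublicIPAddress"
    public_ip_address_id = azurerm_public_ip.lb.id
  }
}
```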

The load balancer needs some more configuration. We need to define a backend IP pool as well as a probe to check the health status of VMs in the backend pool:

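Here is a sketch of the backend pool and an HTTP health probe on port 80. It assumes the load balancer is declared as azurerm_lb.lb, and the resource names are hypothetical. In azurerm 3.x, both resources attach to the load balancer through loadbalancer_id.

```hcl
# Backend pool that the scale set instances join.
resource "azurerm_lb_backend_address_pool" "bpepool" {
  name            = "BackEndAddressPool"
  loadbalancer_id = azurerm_lb.lb.id
}

# Health probe: an instance counts as healthy if GET / on port 80 succeeds.
resource "azurerm_lb_probe" "http" {
  name            = "http-probe"
  loadbalancer_id = azurerm_lb.lb.id
  protocol        = "Http"
  port            = 80
  request_path    = "/"
}
```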

The last step in the configuration is the load-balancing rule, which defines the port to be balanced:

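This sketch shows a rule that forwards TCP port 80 from the frontend to port 80 on the backend pool. It uses the health probe, so unhealthy instances receive no traffic. The resource references and the frontend name "PublicIPAddress" are assumptions and must match the names in your own load balancer definition.

```hcl
# Balance HTTP traffic across all healthy instances in the backend pool.
resource "azurerm_lb_rule" "http" {
  name                           = "http"
  loadbalancer_id                = azurerm_lb.lb.id
  protocol                       = "Tcp"
  frontend_port                  = 80
  backend_port                   = 80
  frontend_ip_configuration_name = "PublicIPAddress" # must equal the frontend's name
  backend_address_pool_ids       = [azurerm_lb_backend_address_pool.bpepool.id]
  probe_id                       = azurerm_lb_probe.http.id
}
```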

Now we have defined the basic components of our architecture and can go ahead. As in our single-VM sample, it is important to define the network security group. This time we do not need the SSH port open; we only need port 80 for our web server.

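This sketch defines an NSG that only allows inbound HTTP and attaches it to the network interface of the scale set. It also shows a scale set definition, which the text does not include. It assumes resources named azurerm_subnet.internal and azurerm_lb_backend_address_pool.bpepool exist (hypothetical names). The VM size, Ubuntu image, instance count, admin user and local SSH key path are placeholders. A Linux scale set still needs an SSH key (or a password) even though port 22 stays closed.

```hcl
# NSG: allow inbound HTTP only; there is no SSH rule.
resource "azurerm_network_security_group" "web" {
  name                = "nsg-webserver-dev-001" # hypothetical name
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name

  security_rule {
    name                       = "allow-http"
    priority                   = 100
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "80"
    source_address_prefix      = "*"
    destination_address_prefix = "*"
  }
}

# The scale set; all values below are placeholders.
resource "azurerm_linux_virtual_machine_scale_set" "vmss" {
  name                = "vmss-webserver-dev-001"
  resource_group_name = azurerm_resource_group.rg.name
  location            = azurerm_resource_group.rg.location
  sku                 = "Standard_B1s"
  instances           = 2
  admin_username      = "azureuser"
  upgrade_mode        = "Manual" # provider default, made explicit

  admin_ssh_key {
    username   = "azureuser"
    public_key = file("~/.ssh/id_rsa.pub") # assumed local key path
  }

  source_image_reference {
    publisher = "Canonical"
    offer     = "0001-com-ubuntu-server-jammy"
    sku       = "22_04-lts"
    version   = "latest"
  }

  os_disk {
    storage_account_type = "Standard_LRS"
    caching              = "ReadWrite"
  }

  network_interface {
    name                      = "nic"
    primary                   = true
    network_security_group_id = azurerm_network_security_group.web.id

    # Place instances in the internal subnet and in the load balancer backend pool.
    ip_configuration {
      name                                   = "internal"
      primary                                = true
      subnet_id                              = azurerm_subnet.internal.id
      load_balancer_backend_address_pool_ids = [azurerm_lb_backend_address_pool.bpepool.id]
    }
  }
}
```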

Now we can plan our script and apply it to our Azure account. Once the VM scale set is up and running, we need our web server on the machines again. To achieve this, we deploy a new resource: the azurerm_virtual_machine_scale_set_extension. It is essentially the same kind of extension we used for the single VM, so the additional entry in the script looks like this:

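Here is a sketch of the extension. It assumes the scale set is declared as azurerm_linux_virtual_machine_scale_set.vmss. It uses the Linux Custom Script extension (publisher Microsoft.Azure.Extensions, type CustomScript). The extension name and the apt-get command are assumptions for an Ubuntu image.

```hcl
# Custom Script extension that installs Apache on the scale set instances.
resource "azurerm_virtual_machine_scale_set_extension" "apache" {
  name                         = "install-apache" # hypothetical name
  virtual_machine_scale_set_id = azurerm_linux_virtual_machine_scale_set.vmss.id
  publisher                    = "Microsoft.Azure.Extensions"
  type                         = "CustomScript"
  type_handler_version         = "2.0"

  # jsonencode turns the HCL object into the JSON string the extension expects.
  settings = jsonencode({
    commandToExecute = "sudo apt-get update && sudo apt-get install -y apache2"
  })
}
```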

During my research on the web, I found that since Terraform 0.12, the jsonencode function can be used for this. It makes it easy to convert a Terraform value into a JSON string. I used this function for the commandToExecute setting.
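To illustrate the difference, here is a sketch that compares a hand-written JSON heredoc with jsonencode. The local value names are hypothetical. Both strings decode to the same object, but with jsonencode Terraform takes care of quoting and escaping.

```hcl
locals {
  # Hand-written JSON: quoting and escaping are your responsibility.
  settings_heredoc = <<-EOT
    {
      "commandToExecute": "sudo apt-get update && sudo apt-get install -y apache2"
    }
  EOT

  # jsonencode: Terraform produces valid JSON from an HCL object.
  settings_encoded = jsonencode({
    commandToExecute = "sudo apt-get update && sudo apt-get install -y apache2"
  })
}

output "settings_encoded" {
  value = local.settings_encoded
}
```

According to the Terraform documentation, jsonencode writes some characters, such as &, as Unicode escape sequences (\u0026). The output therefore looks different from the heredoc, but it is still valid JSON with the same meaning.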

But

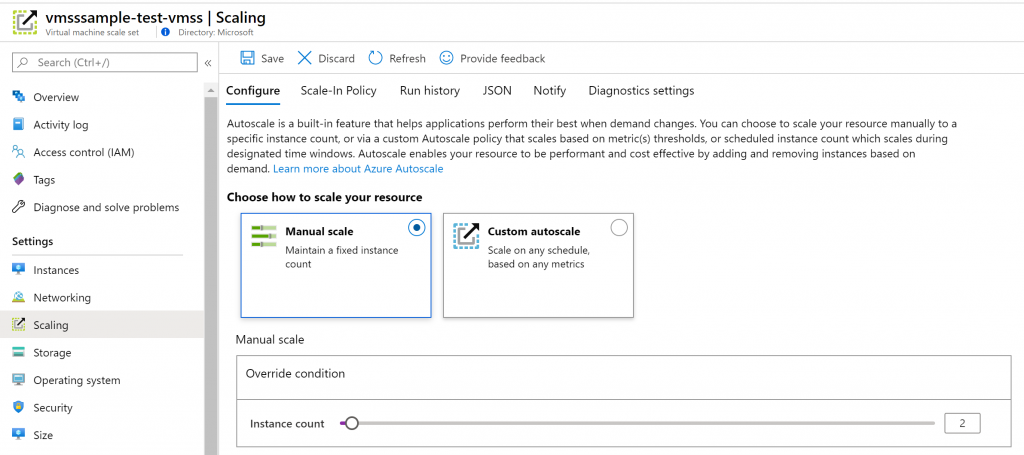

If we now deploy our script to Azure, all components are in place for a virtual machine scale set with a web server installed. As mentioned at the beginning, however, the custom script extension does not work as expected. If you go to the portal and change the number of instances in the scaling settings of the scale set, the custom script extension is deployed to the VMs. If we then browse to the URL of the public IP, the default page of the Apache web server is displayed.
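One possible explanation is an assumption and has not been verified against this setup. azurerm_linux_virtual_machine_scale_set defaults to upgrade_mode = "Manual". With a manual upgrade policy, changes to the scale set model are applied only to new instances, or to existing instances once they are upgraded. Adding an extension through a separate resource after the instances already exist is such a model change. That would match what happens after scaling. The untested sketch below declares the extension inline instead, so that it is part of the model when the instances are first created.

```hcl
# Fragment (assumption): place this block inside
# resource "azurerm_linux_virtual_machine_scale_set" "vmss" { ... }
# instead of using a separate azurerm_virtual_machine_scale_set_extension resource.
extension {
  name                 = "install-apache" # hypothetical name
  publisher            = "Microsoft.Azure.Extensions"
  type                 = "CustomScript"
  type_handler_version = "2.0"

  settings = jsonencode({
    commandToExecute = "sudo apt-get update && sudo apt-get install -y apache2"
  })
}
```

Alternatively, you can bring existing instances up to the latest model manually, for example with az vmss update-instances, passing the resource group, the scale set name and --instance-ids "*".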

So after scaling, the deployment shows the desired state when we browse to the FQDN.

The next post will then show the deployment using Azure App Services to solve the same challenge and add a real website to that script.